Seismic Processing using Deep Learning on the Desktop

Lundin Energy Norway’s GeoLab (now AkerBP Norway) chose to develop a simple desktop application using the simple Tcl/Tk user interface library bundled in Python's standard library. When opened, this application connects to their desktop Petrel using Python Tool Pro and presents a list of input seismic cubes, together with a list of pretrained tensorflow models for various 3D seismic postprocessing schemes.

While machine learning (ML) and deep learning is still in its infancy, utilising deep-learning architecture can give enormous performance increases with today's hardware on calculation-heavy workflows. Read on to get insight in how Cegal’s Python Tool Pro combined with Petrel applications gives geoscientist at Lundin Energy Norway powerful tools on their desktops.

Introduction and Context

The history of machine learning (ML) in the 2010s and 2020s is characterized by a continued increase in interest and investment in the field. This is driven in part by the continued advancement of computing power and data storage capabilities, which has made it possible to train increasingly complex models. Additionally, the 2010s saw the rise of deep learning, a subfield of machine learning that focuses on learning representations of data in multiple layers. Deep learning has been responsible for many of the most impressive ML achievements in recent years, such as the creation of self-driving cars and the defeat of a professional Go player by an AI system.

In the 2020s, ML is expected to continue to grow in popularity and impact. One area of particular interest is reinforcement learning, which has the potential to enable agents to learn how to optimally solve complex tasks by trial and error. Additionally, ML research is increasingly focusing on the development of explainable AI systems, which can provide insight into how and why they make the decisions they do.

——

The previous two paragraphs were written (and copied into this essay without edits) by GPT-3, a publicly-accessible language model with 175 billion hyper-parameters. The field is in its infancy and already produces impressive, sometimes magical, results. Although the most visible applications of this nascent technology are in language- and image generation, nearly every vertical is investigating how to apply this technology in their more focused domains. Most observers expect ML to have transforming effects on parts of the business during the upcoming decade, and in the geoscience sector, there is a focus on seismic conditioning, inversion and interpretation, and well- and rock modeling.

Using Python to develop models

The underlying mathematics of deep learning is both simple and complex: simple in its core concepts, but complex in both the sheer scale of the models and in the number of steps in a particular architecture. For historical reasons, Python has emerged as by far the most popular language platform for developing and running these models. The Python language excels in allowing the user to write expressive code in a readable and forgiving syntax.

Libraries such as numpy, scipy and scikit-learn were all developed in the past two decades to take advantage of these characteristics. To ameliorate Python's poor performance, the cores of these libraries are written in low-level C, C++, or assembler (in the case of the most performant matrix-multiplication routines) – another advantage of Python is that in addition to being excellent for 'glue' code at a high level, it has a well-understood and supported interface to low-level code.

It's easy to write expressive and maintainable code using these libraries for calculation-heavy workflows which also has excellent performance. In recent years, this trend has continued with specialized deep-learning libraries, especially tensorflow and its more high-level companion keras. The core of tensorflow compiles a description of the deep-learning architecture into code running either on the CPU or the GPU where available, giving enormous performance increases with today's hardware.

Cegal Python Tool Pro

Cegal Python Tool Pro (PTP) is part of Cegal's Prizm data science suite. It allows read and writes access to data in a Petrel project via a Petrel plugin and a simple Python API. This access is generally available via a domain model (Grids, SeismicCubes) and its properties, and also via Pandas data frames. This makes Petrel geodata available in an easy-to-use form that interoperates with the wider ecosystem seamlessly.

Python Tool began as part of Blueback Toolbox and used an embedded Python interpreter called IronPython. Although this gave excellent integration and good performance, the non-standard IronPython was unable to use libraries such as numpy or tensorflow. To satisfy our customers' needs for a standard Python environment, we developed Python Tool Pro. This allows the user to use the Python version and environment of their choice, whether Anaconda, Enthought, vanilla Python, etc., together with any libraries they need and any Python IDE they choose, although JupyterLab is a common choice.

The latest Python Tool Pro release is part of Cegal's new Prizm suite, which includes Cegal Hub. Now, the user is not limited to working with Python and Petrel on the same machine - Petrel can be hosted anywhere, as can Python. Using Python code, the user can read and write data from Petrel, open different projects in a Petrel, and even start and stop Petrels in its GUI or headless mode. It's easy to use Python and Petrel together on the desktop, and it's just simple to run a Python application in the cloud which connects to a Petrel installed on a headless server on-prem.

Integrating Python Tool Pro with Deep Learning

Lundin Energy Norway’s GeoLab (now AkerBP Norway) chose to develop a simple desktop application using the simple Tcl/Tk user interface library bundled in Python's standard library. When opened, this application connects to their desktop Petrel using Python Tool Pro and presents a list of input seismic cubes, together with a list of pretrained tensorflow models for various 3D seismic postprocessing schemes (e.g., denoising, multiple attenuations, spectral balancing, etc.).

When the user presses "Apply", the new conditioned cube - e.g., denoised - is automatically created in the Petrel Project. Appropriately, they chose to call this app 'OneClick' as that's all it takes (Bugge et al., 2021) [1].

The geophysicist doesn't need to know any details of Python - or indeed advanced deep learning algorithms - but can stand on the shoulders of the work the core research team developed. This approach saves many hours of repetitive, tedious work by the geoscientist and reduces the need for other expensive monolithic seismic processing applications.

It also allows the research team not only to iterate quickly in a familiar, productive environment but also to deploy new models and techniques to the rest of the business quickly and with the minimum required training.

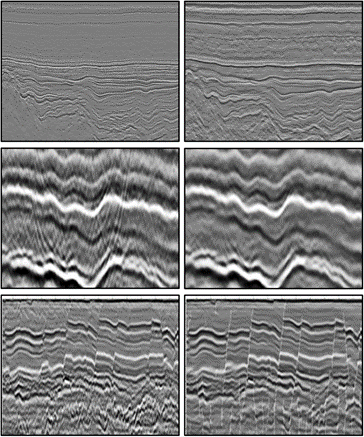

The figure shows seismic examples before and after (1) spectral balancing, (2) removal of processing artifacts, and (3) fault identification with MDP. [2]

How does the application work? At its heart, it is very simple. The user interface is built using the simple and well-documented Tcl/Tk library bundled with Python and when launched, it uses the Cegal Prizm Python Tool pro package to connect to the currently open Petrel project on the user’s desktop. Retrieving all the available input seismic objects is as simple as.

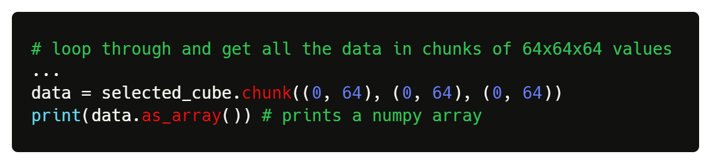

Next, the app reads data in chunks from the selected seismic cube using a straightforward API. The developed Tensorflow inference models require data in certain 'patch sizes', so the app retrieves data from Petrel in specific chunk sizes.

Of course, it's always a bit more complicated in the real world. The models might not work well at the edges of cubes, or with missing data - and the seismic cube is unlikely to be an exact multiple of the patch size. So the app stitches chunk together, perhaps applying smoothing or filling in gaps where appropriate for the exact needs of the selected model.

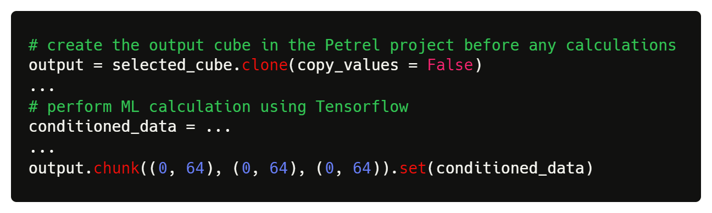

This data is then passed to the Tensorflow model to perform inference – i.e., to calculate the denoised/de striped/conditioned result - and then it's a simple matter of unstitching the data as required, and sending it back to Petrel:

Cegal has implemented a simple example of such a workflow as a notebook (not as a one-click application) at Cegal Prizm’s online documentation site where you can see all of the details.

Positive takeaways and further possibilities

Where can Lundin/AkerBP go from here? In addition to investing in developing more sophisticated time-saving ML algorithms, there are still plenty of opportunities to improve the efficiency of the whole workflow.

Deploying Python applications on the desktop can be tricky for IT departments, so they have worked with Cegal to explore creating bundled Python environments and applications in a simple standard MSI installer which the IT department can manage with their normal processes.

But the geoscientists' desktop machines are not necessarily super-powerful, and Lundin/AkerBP have large clusters of HPC machines available. The 'inference step' of the workflow - the Tensorflow part of the code at its heart - is stateless and so can be easily installed over a large number of machines to speed up the calculations of a whole cube. Cegal and Lundin/AkerBP have prototyped a simple 'compute server' which could be installed on these clusters; the desktop application would replace one line of code with a simple HTTP request call to a cluster proxy server and the user may get instant speed-ups.

Rolling out versions of desktop applications can be a burden, however, even if the computing is performed elsewhere. Modern web applications can be deployed and updated without any knowledge or interaction with the users, and here the use of Cegal Hub (together with the authorization service Cegal Keystone) could be used to provide a simple webpage on an on-prem or cloud-based server that connects to the user's desktop Petrel and performs the workflows - no installation required at all.

And finally, it's possible that there is no need for the user at all in this workflow. Using PTP and Cegal Hub, the lead geophysicist can write an application, or maybe just a Jupyter notebook, which performs the conditioning on a set of cubes in a set of Petrel projects automatically - opening, transforming, and saving each project automatically every few weeks or whenever the need arises.

Read more about our Data Science: Prizm solutions >

[1]Aina Juell Bugge, Jan Erik Lie, Andreas K. Evensen, Espen H. Nilsen, Fredrik Danielsen, and Bruno Gratacos, (2021), "One-click processing with synthetically pre-trained neural networks," SEG Technical Program Expanded Abstracts: 1851-1855. https://doi.org/10.1190/segam2021-3583313.1

[2]Ibid.